In recent years, large language models (LLMs) have dramatically reshaped the landscape of artificial intelligence (AI). Built by companies such as OpenAI, Meta, and Google, these networks often contain hundreds of billions of parameters, and that scale makes them remarkably effective at discerning patterns and generating coherent responses. Those capabilities come at a substantial cost, both financial and environmental. The emergence of small language models (SLMs) is shifting the conversation about optimization in AI toward a more pragmatic approach, one that could redefine how we harness the power of machine learning.

The Price of Power: The Burden of Large Models

Training a massive language model requires an extraordinary amount of computational resources. Google’s investment in training its Gemini 1.0 Ultra model reportedly reached $191 million, a figure that raises serious questions about the sustainability of AI development. Using these models is costly too: a single query to an LLM such as ChatGPT consumes about 10 times as much energy as a traditional Google search, according to research from the Electric Power Research Institute. Power consumption on that scale calls into question the viability of ever-larger models in a world increasingly concerned with carbon footprints and energy efficiency.

Small Language Models: A Practical Alternative

While LLMs serve well as general-purpose tools, small language models follow a different philosophy, one centered on specialization and efficiency. With parameter counts that usually top out around 10 billion, SLMs excel at narrowly defined tasks such as healthcare chatbots, summarization tools, or data aggregation applications. Carnegie Mellon University’s Zico Kolter notes that for many applications, an 8 billion-parameter model performs well. Models of this size also lower the computational barrier to entry: they can run on personal devices such as laptops and smartphones, which puts AI within reach of far more users.
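A rough back-of-the-envelope calculation shows why parameter count decides whether a model fits on a personal device. The sketch below estimates the memory needed for the weights alone; the numeric precisions (16-bit and 4-bit storage) and the 200-billion-parameter comparison point are illustrative assumptions, not figures from the researchers quoted here, and real deployments need additional memory for activations and caches.

```python
def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Assumed storage precisions (illustrative, not from the article).
FP16_BYTES = 2.0   # 16-bit floating point
INT4_BYTES = 0.5   # 4-bit quantized weights

small_model = 8_000_000_000     # the 8 billion-parameter size mentioned above
large_model = 200_000_000_000   # hypothetical stand-in for "hundreds of billions"

for name, params in [("8B model", small_model), ("200B model", large_model)]:
    fp16 = weight_memory_gb(params, FP16_BYTES)
    int4 = weight_memory_gb(params, INT4_BYTES)
    print(f"{name}: about {fp16:.0f} GB at 16-bit, about {int4:.0f} GB at 4-bit")
```

Under these assumptions the small model's weights fit in the memory of a well-equipped laptop, while the large model's do not, whatever precision is chosen.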

Innovative Training Techniques: Knowledge Distillation and Pruning

To make the most of small models, researchers are using training techniques designed for efficiency. One of them, knowledge distillation, uses a large model to improve the performance of a smaller one. In essence, the larger model generates a high-quality dataset and so acts as a tutor for its smaller counterpart. Kolter’s observation that SLMs can perform well with far less data underscores the value of clean, carefully curated training sets over the disorganized mass of raw text typically scraped from the internet.
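As a hedged illustration of the teacher-student idea, the sketch below uses one common formulation of distillation, in which the student is trained to match the teacher's softened output distribution. This is not necessarily the dataset-generation approach described above, and the logits, the temperature value, and the function names are all illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The loss is zero when the student reproduces the teacher exactly and
    grows as the two distributions diverge.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Made-up logits over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]
poor_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

In a real training loop this loss would be minimized by gradient descent over the student's parameters, usually alongside an ordinary loss on ground-truth labels.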

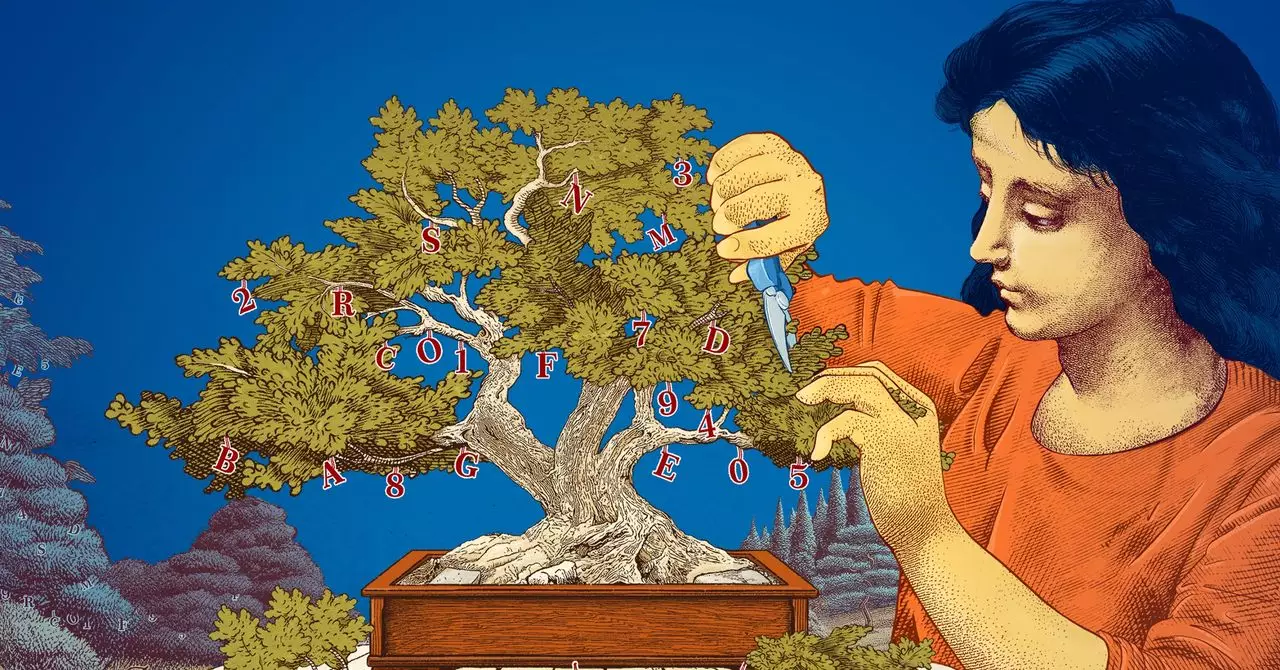

Researchers are also exploring “pruning,” an idea borrowed from biological neural networks, which gain efficiency by shedding unnecessary connections. In a seminal 1989 paper, Yann LeCun argued that up to 90% of the parameters in a trained neural network could be removed without compromising performance, and he called the method “optimal brain damage.” Pruning not only streamlines a model but can also help tailor it to a specific task, further extending its usefulness.
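To make the idea concrete, here is a minimal sketch of magnitude pruning, which zeroes out the weights with the smallest absolute values. This is a simpler heuristic than the method in LeCun's paper, which ranks parameters by a second-derivative estimate of their importance; the function name and the example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed.

    `sparsity` is the fraction of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from least to most important (by absolute value).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights of one small layer.
layer = [0.5, -0.01, 0.3, 0.02, -0.9, 0.001, 0.07, -0.04, 0.2, 0.003]
sparse_layer = magnitude_prune(layer, sparsity=0.9)
kept = [w for w in sparse_layer if w != 0.0]
print(f"kept {len(kept)} of {len(layer)} weights")
```

In practice a pruned network is usually fine-tuned afterward so the remaining weights can compensate for the ones removed.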

Lower Risk, Higher Innovation: The Research Implications

For the AI research community, small language models offer a lower-risk environment for testing new ideas. With fewer parameters, each experiment costs far less money and compute, so a failed idea is cheap to discard. Leshem Choshen of the MIT-IBM Watson AI Lab emphasizes this advantage, noting that SLMs allow more experimentation and creativity in model development. And because smaller models have fewer parameters to inspect, they may be easier to interpret, which matters as the industry moves toward greater transparency.

Balancing Act: The Future of Model Size in AI

Large language models will continue to play a vital role in complex tasks such as generalized chatbots and image synthesis, but the rise of small language models marks a pivot toward practicality and accessibility. These efficient models save computational cost, time, and resources, and they open AI development to a broader audience. As researchers weigh ambition against sustainability, the case for versatile, energy-efficient models keeps getting stronger.

The emergence of smaller, task-specific language models may redefine collaboration within the AI community and change how we think about the future of machine intelligence. It encourages innovation grounded in the demands of real-world applications while addressing the environmental costs of ever-larger models.