In an age of rapid technological change, artificial intelligence (AI) has created both remarkable opportunities and significant threats. One of the most serious is the spread of AI-generated fraud, which endangers both organizational integrity and individual security. Recent findings indicate that approximately 85% of corporate finance professionals regard AI-driven scams as a severe concern. More than half of these professionals report that they have already been affected by deepfakes, which have become one of fraudsters’ most effective tools.

The start-up AI or Not has emerged in this context, raising $5 million in seed funding to develop tools for “detecting AI” across various forms of media. The round, led by Foundation Capital with participation from other notable investors, reflects growing recognition that AI-fueled deception must be addressed urgently. The potential financial impact is large: projections suggest that, if left unchecked, generative AI scams could cause losses exceeding $40 billion in the U.S. within the next two years.

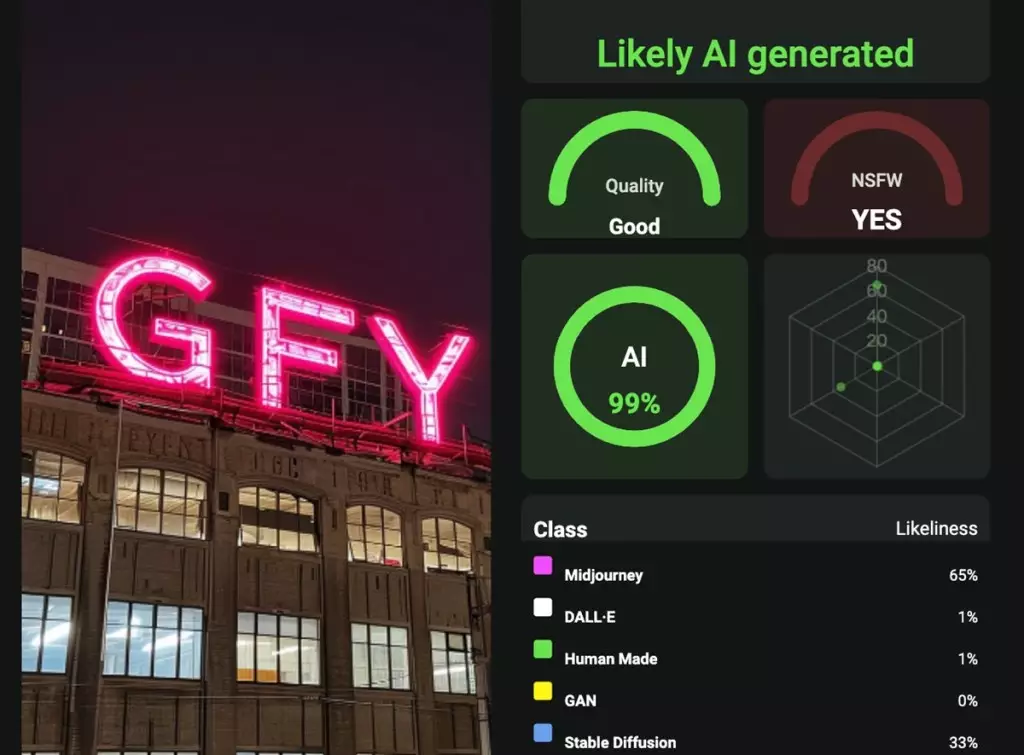

AI or Not uses proprietary algorithms to distinguish authentic content from AI-generated media. This addresses the need to safeguard a digital environment in which misinformation and deception are increasingly pervasive. The challenge is multifaceted and constantly evolving, ranging from deepfake videos impersonating public figures to AI-generated audio and music appearing on mainstream platforms. As generative AI continues to advance, detection methods must keep pace.

Zach Noorani, a partner at Foundation Capital, described the urgency of this challenge, noting that the traditional ways people assess authenticity, primarily through sight and sound, are no longer sufficient. AI or Not aims to close this gap by giving organizations and individuals the tools they need to navigate the complexities introduced by generative AI.

The growing backlash against tech giants such as Meta suggests that public demand for authenticity and transparency is rising. Consumers and organizations alike are beginning to recognize the economic and social costs of misinformation, underscoring the need for reliable verification methods.

AI or Not’s technology is designed to help keep digital interactions trustworthy. By developing algorithms that detect AI-generated content and fraudulent activity, the company aims to help restore trust in digital media. This matters most for individuals and organizations concerned about deepfakes in sensitive contexts such as finance and governance.

Positioning for Future Challenges

The new funding will be central to expanding the company’s capabilities against the rising tide of AI-related threats. According to Anatoly Kvitnitsky, CEO of AI or Not, the capital will allow the company to significantly enhance its tools and methods, with the aim of creating a safer digital landscape. The objective is clear: to give users the means to detect and neutralize AI-assisted fraud proactively.

As AI technologies evolve, the need for adaptive strategies becomes increasingly apparent. AI or Not’s work in this space is not just a business opportunity; it is a societal necessity. By equipping users with powerful detection tools, the company hopes to mitigate the harm caused by misinformation and fraud.

Organizations, governments, and individuals must recognize the risks that generative AI brings as it becomes more pervasive. AI or Not’s work is an important step toward a robust framework for digital authenticity. Its commitment to improving AI detection aims both to foster trust in digital communications and to protect vulnerable people and businesses from the growing risks of AI misuse.

While AI continues to reveal its transformative power, it is equally vital to build resilient defenses against its exploitation. Initiatives like AI or Not’s offer a measure of hope in an increasingly complex digital world.