In recent years, artificial intelligence (AI) has made significant strides, particularly in natural language processing. Large language models (LLMs) like GPT-4 have been especially impactful, transforming tasks such as text generation, coding assistance, and machine translation. Researchers have now identified an “Arrow of Time” effect in these models: LLMs are consistently better at predicting the next word in a text than at predicting the previous one. The finding challenges assumptions about how such models capture language and raises questions about temporal processing in artificial intelligence.

At their core, LLMs operate through a simple yet powerful mechanism: predicting the next word in a sequence from the words that precede it. This forward prediction has received most of the attention. Backward prediction, in which the model must infer the preceding word from the words that follow, has been explored far less, and it is here that the models perform worse. The gap reveals an asymmetry in how language models engage with text: what they learn appears tied to the forward flow of language rather than to a direction-independent grasp of its structure.
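To make the forward/backward distinction concrete, here is a minimal sketch using a toy bigram counter. It is not the neural models used in the study; the corpus, the function names `train_bigram` and `cross_entropy`, and the add-alpha smoothing are all assumptions chosen for illustration. The point is only that a "backward" model can be obtained by reversing the token order and reusing the same training and evaluation code.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for each token, which tokens come right after it in `tokens`."""
    counts = defaultdict(Counter)
    for context, target in zip(tokens, tokens[1:]):
        counts[context][target] += 1
    return counts


def cross_entropy(counts, tokens, vocab_size, alpha=1.0):
    """Mean negative log2-probability per prediction, with add-alpha smoothing."""
    pairs = list(zip(tokens, tokens[1:]))
    total = 0.0
    for context, target in pairs:
        seen = counts.get(context, Counter())
        prob = (seen[target] + alpha) / (sum(seen.values()) + alpha * vocab_size)
        total -= math.log2(prob)
    return total / len(pairs)


# Hypothetical toy corpus, whitespace-tokenized.
tokens = "the cat sat on the mat and the dog sat on the rug".split()
vocab_size = len(set(tokens))

# Forward: predict token t+1 from token t.
forward_model = train_bigram(tokens)
forward_loss = cross_entropy(forward_model, tokens, vocab_size)

# Backward: reverse the sequence and reuse the same code,
# so the model now predicts token t-1 from token t.
reversed_tokens = tokens[::-1]
backward_model = train_bigram(reversed_tokens)
backward_loss = cross_entropy(backward_model, reversed_tokens, vocab_size)

print(f"forward  loss: {forward_loss:.3f} bits/token")
print(f"backward loss: {backward_loss:.3f} bits/token")
```

On a corpus this small the two numbers carry no meaning. The sketch only shows the setup: the same model and the same loss, applied once to the text as written and once to the text reversed.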

Unraveling the Research Insights

The research began with Professor Clément Hongler and Jérémie Wenger, who set out to test whether language models could construct narratives in reverse order. Working with machine learning expert Vassilis Papadopoulos, they evaluated several architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) neural networks. The results were consistent: every model predicted backward less accurately than forward, pointing to a general disadvantage when the direction of the text is reversed.

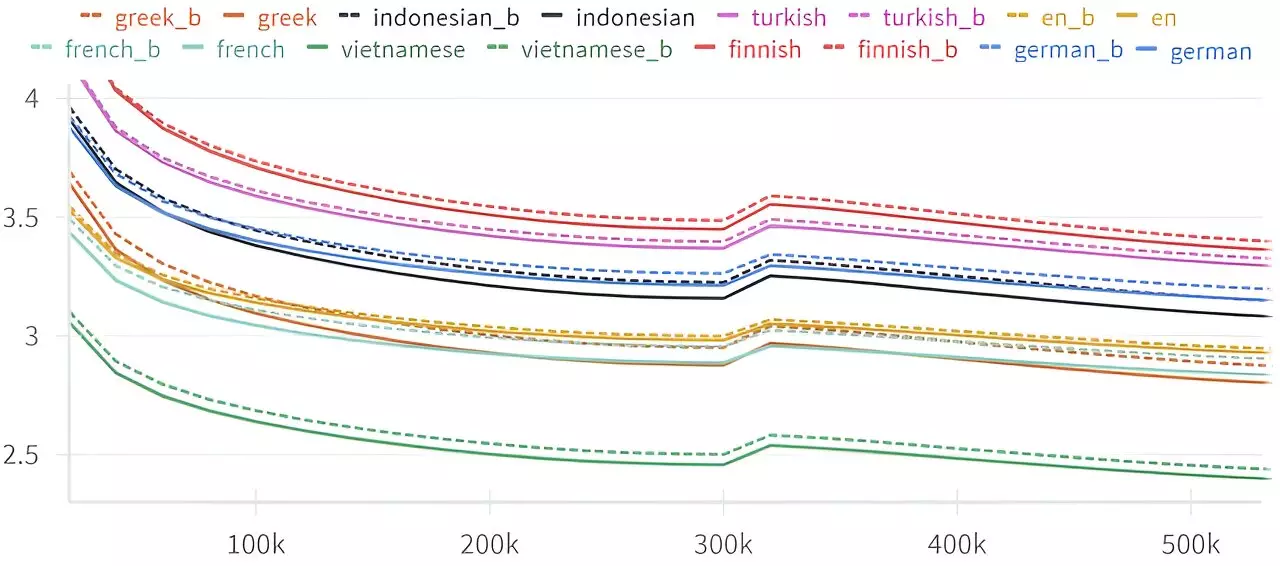

The implications of these findings are significant. They point to a systematic bias in the models and hint at something deeper about the structure and flow of language itself. Hongler notes that the phenomenon holds across different languages and types of LLMs, suggesting that the forward advantage is a general property of these models. The result echoes earlier work in information theory by Claude Shannon, who compared how well the next character of a text could be predicted with how well the preceding one could. In theory the two tasks are equally difficult, yet people showed a preference for forward prediction, a pattern that now finds a counterpart in modern AI models.
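The theoretical parity can be made explicit with the chain rule of probability. As a sketch, write $P$ for the true distribution over a sequence of tokens $x_1, \dots, x_n$ (notation introduced here for illustration, not taken from the study). The same joint probability factorizes in either direction:

$$
P(x_1, \dots, x_n) \;=\; \prod_{t=1}^{n} P(x_t \mid x_1, \dots, x_{t-1}) \;=\; \prod_{t=1}^{n} P(x_t \mid x_{t+1}, \dots, x_n)
$$

Taking the negative logarithm of each side gives

$$
\sum_{t=1}^{n} -\log P(x_t \mid x_1, \dots, x_{t-1}) \;=\; \sum_{t=1}^{n} -\log P(x_t \mid x_{t+1}, \dots, x_n),
$$

so a predictor with access to the exact conditionals would incur the same total loss in both directions. Any gap observed in practice therefore reflects how well a given learner, human or machine, approximates those conditionals, not a difference in the information the text contains.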

This finding extends beyond a temporal bias in prediction. It links properties of language, the way intelligent agents process information, and broader philosophical questions about the nature of time itself. One hypothesis is that the observed asymmetry could serve as a marker for detecting intelligence, offering a framework for assessing an AI system's reasoning abilities.

Moreover, the study connects to broader philosophical inquiries into causality and the nature of time as an emergent concept. If language models show an inherent bias toward the forward direction, then questions about causality and the passage of time become intertwined with our understanding of artificial intelligence. As LLMs evolve, this relationship may open new avenues for studying temporal dynamics in both human cognition and artificial systems.

The path to these conclusions has a narrative of its own, rooted in an initial collaboration with The Manufacture theater school. Hongler and Wenger's objective was to develop a chatbot capable of improvising alongside actors. The premise required constructing narratives that culminated in desired endings. This artistic goal inspired the idea of training chatbots to converse in reverse, which in turn drew attention to the structure of language and its inherent temporal qualities.

As the research progressed, the “Arrow of Time” effect in LLMs emerged as a new lens through which to view language and AI processing. The extent to which these models can emulate human-like reasoning remains a topic of ongoing inquiry. For Hongler, the work shows how an unexpected observation can lead into previously uncharted territory.

Overall, the study highlights the close connection between LLMs and the structure of language, and it raises philosophical questions about intelligence and the nature of time itself. As researchers continue to investigate, a richer understanding of both AI capabilities and the fundamental structure of human language may emerge, potentially reshaping future developments in artificial intelligence.