The landscape of artificial intelligence is rapidly changing, driven by innovative startups eager to challenge the status quo established by tech giants. One such company making waves is DeepSeek, a Chinese AI startup born from the quantitative hedge fund High-Flyer Capital Management. The company recently unveiled its newest model, DeepSeek-V3, a significant advancement that demonstrates the potential of open-source AI solutions. This article delves into the features and implications of DeepSeek-V3, exploring its architectural innovations and the broader impact on the AI industry.

DeepSeek-V3 has 671 billion parameters in total, but it uses a mixture-of-experts (MoE) architecture that activates only a subset of them for each token it processes. This selective activation lets DeepSeek-V3 deliver strong performance while remaining computationally efficient. In DeepSeek’s benchmark tests, it outperforms several leading open-source models, including Meta’s Llama-3.1-405B, and performs comparably to closed models from Anthropic and OpenAI.

DeepSeek-V3’s architecture builds on its predecessor, DeepSeek-V2, and keeps the same foundation of multi-head latent attention (MLA) and MoE layers. MLA compresses the attention key-value cache to make inference cheaper. The MoE layers route each token to a small number of specialized “expert” subnetworks. As a result, only 37 billion of the 671 billion parameters (about 5.5%) are activated for each token, which keeps both training and inference costs down.
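To make selective activation concrete, here is a minimal Python sketch of top-k expert routing. It is a toy under stated assumptions, not DeepSeek’s implementation. The eight scalar “experts”, the router scores, and the softmax gating over the selected experts are all hypothetical, and DeepSeek-V3’s actual router differs in detail. The last line computes the share of parameters active per token from the 37 billion and 671 billion figures.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(scores, k):
    """Pick the k highest-scoring experts and normalize their gate weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    return list(zip(top, gates))


def moe_layer(x, experts, scores, k):
    # Only the selected experts are called; the others do no work for this token.
    return sum(gate * experts[i](x) for i, gate in top_k_route(scores, k))


# Hypothetical toy setup: 8 experts, each just scales its input.
experts = [lambda x, s=s: (s + 1) * x for s in range(8)]
scores = [0.1, 2.0, -1.0, 1.5, 0.3, 0.0, -0.5, 0.9]

print(top_k_route(scores, k=2))  # experts 1 and 3 are selected
print(moe_layer(1.0, experts, scores, k=2))

# Share of DeepSeek-V3's parameters active per token.
print(f"{37 / 671:.1%} of parameters active per token")  # 5.5%
```

Because only the k selected experts run, compute per token scales with the active parameters rather than the total.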

Innovative Techniques: Balancing and Predicting

DeepSeek’s latest release includes two notable innovations. The first is an auxiliary-loss-free load-balancing strategy. MoE models have traditionally spread work across experts by adding an auxiliary loss term during training, but that term can degrade model quality. DeepSeek-V3 instead monitors how heavily each expert is used and dynamically adjusts routing to favor underused experts, which balances the load without sacrificing performance. Balance matters in a model as large as DeepSeek-V3. If a few experts receive most of the tokens, they become bottlenecks while the rest of the model’s capacity goes to waste.
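The Python sketch below illustrates bias-based, auxiliary-loss-free balancing as described in DeepSeek’s technical report. Each expert has a bias that is added to its routing score only when experts are being chosen. After each step, that bias is nudged down for overloaded experts and up for underloaded ones. The function names, the toy batch, and the step size `gamma` are hypothetical, and real training tracks loads over far larger batches.

```python
def select_experts(affinities, biases, k):
    # The bias only influences which experts are chosen...
    biased = [a + b for a, b in zip(affinities, biases)]
    top = sorted(range(len(affinities)), key=lambda i: biased[i], reverse=True)[:k]
    # ...while the gate weights come from the unbiased affinities.
    total = sum(affinities[i] for i in top)
    return [(i, affinities[i] / total) for i in top]


def update_biases(biases, loads, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new_biases = []
    for b, load in zip(biases, loads):
        if load > mean_load:
            new_biases.append(b - gamma)
        elif load < mean_load:
            new_biases.append(b + gamma)
        else:
            new_biases.append(b)
    return new_biases


# Hypothetical batch of 4 tokens whose raw affinities all favor expert 0.
batch = [
    [0.9, 0.5, 0.4, 0.3],
    [0.8, 0.7, 0.2, 0.1],
    [0.9, 0.2, 0.6, 0.5],
    [0.7, 0.3, 0.2, 0.6],
]
biases = [0.0] * 4
k = 1

for step in range(3):
    loads = [0] * 4
    for aff in batch:
        for i, _ in select_experts(aff, biases, k):
            loads[i] += 1
    print(step, loads, [round(b, 2) for b in biases])
    biases = update_biases(biases, loads, gamma=0.1)
# loads go from [4, 0, 0, 0] to [2, 1, 0, 1] to [1, 1, 1, 1]
```

No extra loss term touches the gradients here. Balance comes entirely from shifting which experts win the top-k selection.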

The second innovation is multi-token prediction (MTP). Instead of training the model to predict only the next token, MTP also trains it to predict further upcoming tokens at each position, which gives the model a denser training signal. The extra prediction modules can also be reused for speculative decoding at inference time. DeepSeek reports that DeepSeek-V3 generates about 60 tokens per second, three times faster than DeepSeek-V2. Gains like these suggest that the next generation of AI models could substantially cut processing times while maintaining accuracy.
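As a rough illustration, the Python sketch below shows how multi-token prediction changes the training targets. With a hypothetical prediction depth of 1, each position is supervised on the next token and the one after it. The `combined_loss` helper and its `weight` parameter are assumptions that follow the general recipe of adding a down-weighted average of the extra losses to the main loss. DeepSeek-V3 produces the extra predictions with dedicated sequential modules, which this sketch does not model.

```python
def mtp_targets(tokens, depth):
    """Pair each prefix with its next token plus `depth` further future tokens."""
    examples = []
    # Stop early enough that every position has all 1 + depth targets available.
    for i in range(len(tokens) - depth - 1):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 2 + depth]
        examples.append((context, targets))
    return examples


def combined_loss(main_loss, mtp_losses, weight):
    """Main next-token loss plus the weighted average of the extra-depth losses."""
    return main_loss + weight * sum(mtp_losses) / len(mtp_losses)


tokens = ["The", "cat", "sat", "on", "the", "mat"]
for context, targets in mtp_targets(tokens, depth=1):
    print(context, "->", targets)
# The last line printed is ['The', 'cat', 'sat', 'on'] -> ['the', 'mat']

print(combined_loss(2.0, [3.0], weight=0.3))
```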

DeepSeek-V3 was pre-trained on 14.8 trillion diverse, high-quality tokens. Its context window was then extended in two stages, first to 32K tokens and then to 128K. Finally, post-training with supervised fine-tuning (SFT) and reinforcement learning (RL) aligned the model with human preferences and further improved its capabilities.

DeepSeek also kept training costs low. It used FP8 mixed-precision training and DualPipe, a pipeline-parallelism algorithm that overlaps computation with communication. The full training process consumed about 2,788,000 H800 GPU hours, or roughly $5.576 million. That figure covers only the official training of the final model, not earlier research or ablation experiments. Even so, it is a small fraction of the hundreds of millions of dollars typically spent on large-scale models; Llama 3.1, for example, reportedly cost over $500 million to train.
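The headline cost is simple arithmetic on the GPU-hour count. The short Python calculation below shows that the quoted figures imply a rental price of about $2 per GPU hour. It then compares the result with the reported Llama 3.1 estimate. The flat rate and the comparison are illustrative only, not audited costs.

```python
# Figures quoted in the article.
gpu_hours = 2_788_000
quoted_cost_usd = 5.57e6

# The hourly rental rate those two figures imply.
implied_rate = quoted_cost_usd / gpu_hours
print(f"implied rate: ${implied_rate:.2f} per GPU hour")  # $2.00

# Recompute the total at a flat $2 per GPU hour.
rate_usd = 2.0
cost_usd = gpu_hours * rate_usd
print(f"training cost: ${cost_usd / 1e6:.3f}M")  # $5.576M

# Compare with the reported (unverified) estimate for Llama 3.1.
llama_estimate_usd = 500e6
print(f"about {llama_estimate_usd / cost_usd:.0f}x less")  # 90x
```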

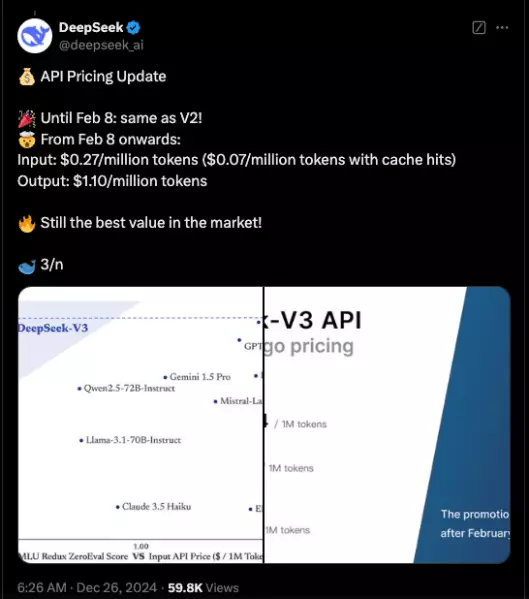

DeepSeek-V3’s benchmark results put it ahead of existing open-source models: it clearly outperforms Llama-3.1-405B and Qwen 2.5-72B across a range of tests. It is even competitive with proprietary models such as GPT-4o. The notable exceptions are the SimpleQA and FRAMES benchmarks, where GPT-4o leads by significant margins.

DeepSeek-V3 is particularly strong on Chinese-language and mathematics tasks, where it clearly outperformed its competitors. On the MATH-500 benchmark, for example, it scored 90.2. Qwen scored 80, the next-best result among the compared models.

The advancements typified by DeepSeek-V3 signal a critical shift in the AI ecosystem, where the gap between open-source and closed-source AI continues to narrow. The enhancements offered by such open models pave the way for broader industry applications, reducing reliance on major players in the AI arena and democratizing access to cutting-edge technology.

As the AI sector continues to evolve, DeepSeek-V3 stands out as a testament to the potential of open-source projects. With its innovative features and strong benchmark performance, it expands the tools available to developers and enterprises and fosters competition that benefits users worldwide. By offering AI that rivals the products of established corporations, DeepSeek is positioning itself as a significant player in the pursuit of artificial general intelligence. DeepSeek releases the code under an MIT license, provides the model weights under its own model license, and offers access through a commercial API. In doing so, it is reshaping how industries think about, develop, and deploy artificial intelligence.