In artificial intelligence, and particularly in language modeling, collaboration between models has emerged as a promising way to improve accuracy and efficiency. MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has introduced an algorithm called Co-LLM that lets a general-purpose language model cooperate with a specialized counterpart. By allowing the general model to hand off parts of its answer to the specialist, Co-LLM aims to improve performance on language generation tasks. Such collaborative mechanisms could change how we approach complex questions, with consequences for both academic research and practical applications.

Language models, while powerful, often struggle in domain-specific areas. Because they are trained on vast amounts of data, they can generate coherent responses, but that general-purpose training can produce inaccuracies on specialized questions, much as a person might stumble when answering a technical question outside their expertise. This shortfall calls for a system that can recognize when to draw on a specialized model, much like consulting a colleague who knows the subject well.

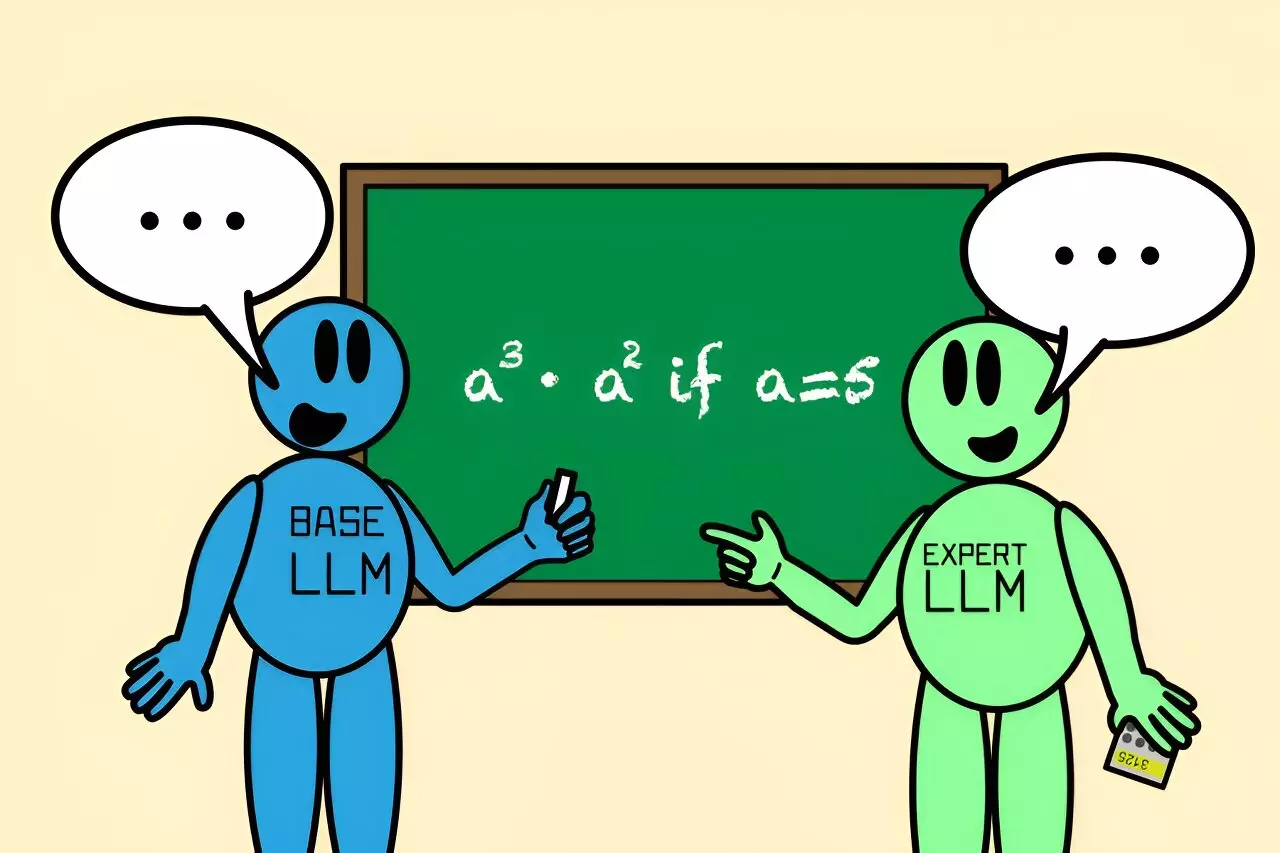

Co-LLM bridges this knowledge gap by pairing two models: a general-purpose model, which drives the response, and an expert model, which provides targeted assistance when part of the answer calls for higher precision. The interplay mirrors human problem-solving, where people seek help with challenges beyond their expertise.

At the heart of Co-LLM is a mechanism that monitors the general-purpose model as it generates. As the response is built up, Co-LLM evaluates each token (a word or a fragment of a word) to decide whether the expert model should produce it instead. This constant evaluation works much like a project manager deciding when a particular skill set is needed, so specialized knowledge is brought in only where it helps.
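The per-token check described above can be sketched as a greedy decoding loop that consults a switch before every token. This is a minimal illustration, not CSAIL's implementation: the toy `toy_base`/`toy_expert` functions, the `defer_prob` switch, and the 0.5 threshold are all hypothetical stand-ins, and real models would return distributions over a full vocabulary rather than a handful of strings.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-ins: a "model" maps the tokens so far to a
# next-token distribution. Real systems would run neural networks here.
Distribution = Dict[str, float]
Model = Callable[[List[str]], Distribution]


def greedy(dist: Distribution) -> str:
    """Pick the most probable token."""
    return max(dist, key=dist.get)


def collaborative_decode(
    prefix: List[str],
    base: Model,
    expert: Model,
    defer_prob: Callable[[List[str]], float],
    threshold: float = 0.5,
    max_tokens: int = 10,
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Generate token by token, letting the expert produce a token only
    when the (assumed) switch probability reaches the threshold.

    Returns the generated tokens and which model produced each one.
    """
    tokens = list(prefix)
    sources: List[str] = []
    for _ in range(max_tokens):
        # The switch is checked before every token; the expert is
        # called only when deferral is judged worthwhile.
        if defer_prob(tokens) >= threshold:
            tok, src = greedy(expert(tokens)), "expert"
        else:
            tok, src = greedy(base(tokens)), "base"
        if tok == eos:
            break
        tokens.append(tok)
        sources.append(src)
    return tokens[len(prefix):], sources


# Toy models (hypothetical): the base model slips on the arithmetic,
# the expert gets it right, and the switch fires right after "=".
def toy_base(tokens: List[str]) -> Distribution:
    if tokens[-1] == "=":
        return {"125": 0.6, "3125": 0.4}
    return {"<eos>": 1.0}


def toy_expert(tokens: List[str]) -> Distribution:
    if tokens[-1] == "=":
        return {"3125": 0.95, "125": 0.05}
    return {"<eos>": 1.0}


def toy_switch(tokens: List[str]) -> float:
    return 0.9 if tokens[-1] == "=" else 0.1


print(collaborative_decode(["5^3", "*", "5^2", "="], toy_base, toy_expert, toy_switch))
# (['3125'], ['expert'])
```

With a switch that never fires (for example `lambda t: 0.0`), the same loop returns the base model's incorrect `125`, which is the behavior the deferral is meant to catch.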

This decision is made by a learned “switch variable” that indicates, for each token, whether the expert model should generate it. The switch is trained with machine learning, effectively teaching the general-purpose model when to “phone a friend.” For example, if asked to name the ingredients of a prescription drug, the base model can write most of the answer itself while calling on the specialized model to supply the critical, domain-specific details.
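One way such a switch could be trained without hand-labeling which tokens need help is to treat it as a hidden choice and maximize the likelihood of reference text under a two-model mixture. The sketch below assumes the switch is a logistic function of the base model's hidden state `h` and that, for each position, we already know the probability each model assigns to the correct token. This formulation, the names `switch_prob` and `train_switch`, and the toy numbers are illustrative assumptions, not a transcription of CSAIL's training code.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def switch_prob(h: Sequence[float], w: Sequence[float], b: float) -> float:
    """Probability of deferring to the expert given hidden state h (assumed logistic form)."""
    return sigmoid(sum(hi * wi for hi, wi in zip(h, w)) + b)


def train_switch(
    hidden: List[List[float]],
    p_base: List[float],
    p_expert: List[float],
    lr: float = 0.5,
    steps: int = 500,
) -> Tuple[List[float], float]:
    """Fit (w, b) by gradient ascent on the mean of
    log[(1 - pi_t) * p_base[t] + pi_t * p_expert[t]], where pi_t = switch_prob(h_t).

    p_base[t] and p_expert[t] are the probabilities each model gives the
    reference token at position t; no per-token "defer" labels are needed.
    """
    dim = len(hidden[0])
    n = len(hidden)
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * dim, 0.0
        for h, pb, pe in zip(hidden, p_base, p_expert):
            pi = switch_prob(h, w, b)
            lik = (1 - pi) * pb + pi * pe
            # d/dz log(lik) with z = w.h + b: pi * (1 - pi) * (pe - pb) / lik
            g = pi * (1 - pi) * (pe - pb) / lik
            for i in range(dim):
                grad_w[i] += g * h[i]
            grad_b += g
        w = [wi + lr * gi / n for wi, gi in zip(w, grad_w)]
        b += lr * grad_b / n
    return w, b


# Toy data (hypothetical): feature [1, 0] marks a domain-specific token where
# the expert is far more likely to be right; [0, 1] marks an ordinary token.
hidden = [[1.0, 0.0], [0.0, 1.0]]
w, b = train_switch(hidden, p_base=[0.1, 0.9], p_expert=[0.9, 0.3])
print(round(switch_prob(hidden[0], w, b), 3), round(switch_prob(hidden[1], w, b), 3))
```

After training, the deferral probability for the domain-specific position ends up above 0.5 and the one for the ordinary position below it, so the switch learns where the expert helps purely from which model explains the reference text better.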

Co-LLM has been tested in domains including biomedical question answering and mathematical problem-solving. Using datasets such as the BioASQ medical set, the researchers paired a general model with expert models pre-trained on domain-specific data. The combination showed marked improvements on medical questions, such as explaining the mechanisms behind diseases or identifying the components of prescription drugs.

For instance, a routine medical query that might stymie a general LLM could yield a precise answer when supplemented by medical expertise. On math problems, where the base language model made calculation errors on its own, Co-LLM paired it with a specialized math model, which corrected those errors and produced accurate solutions.

This collaboration changes how language models can work together. Because the expert is consulted only for the tokens where the switch calls for it, Co-LLM departs from approaches that query multiple models simultaneously, allowing more efficient response generation without compromising quality.
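A back-of-the-envelope cost model makes the efficiency argument concrete. This is a sketch under assumed conditions: the per-token costs are hypothetical units, the base model is assumed to run at every step because it also supplies the switch's input, and the baseline for comparison queries both models at every token.

```python
from typing import Sequence


def selective_cost(defer_flags: Sequence[bool], base_cost: float, expert_cost: float) -> float:
    """Base model runs at every step (it also feeds the switch);
    the expert runs only on steps where the switch deferred."""
    return len(defer_flags) * base_cost + sum(defer_flags) * expert_cost


def always_both_cost(n_tokens: int, base_cost: float, expert_cost: float) -> float:
    """Both models run at every step."""
    return n_tokens * (base_cost + expert_cost)


# Hypothetical numbers: 100 tokens, 10 of them deferred,
# and an expert that costs 10x as much per step as the base model.
flags = [i % 10 == 0 for i in range(100)]
print(selective_cost(flags, 1.0, 10.0))  # 200.0
print(always_both_cost(100, 1.0, 10.0))  # 1100.0
```

The savings scale with how rarely the switch fires: the fewer tokens that need the expert, the closer the total cost gets to running the base model alone.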

The researchers see room for further improvement. They are exploring more robust self-correction mechanisms that would let the system backtrack and fix inaccuracies when the specialized model gives a wrong answer. Such adaptive capabilities would make the output more reliable and help keep the system current with new knowledge, particularly in rapidly evolving fields.

Co-LLM’s implications also extend beyond academic curiosity. The approach could find practical use in enterprise settings, where precise and up-to-date information is crucial: by helping keep internal documents and communications consistent with the most relevant data, it could improve an organization’s operational efficiency.

Co-LLM marks a notable step in the development of language models. By emphasizing collaboration akin to human teamwork, the algorithm offers a glimpse of how future AI systems might interact. Integrating specialized knowledge into general frameworks promises improved accuracy and could foster a deeper understanding of intricate subjects. As the technology evolves, Co-LLM illustrates what effective collaboration between AI models can achieve.