In an age where our digital footprints are monitored and personal information is increasingly vulnerable, privacy has become a paramount concern for users and software developers alike. Recent developments at Signal, a messaging platform known for its strong privacy protections, highlight the escalating conflict between user privacy and the product ambitions of corporate giants like Microsoft. With its new Screen Security feature, launched on Wednesday, Signal is taking a proactive approach to protecting its users from intrusive software practices, particularly those associated with Microsoft’s Recall feature.

The Emergence of Screen Security

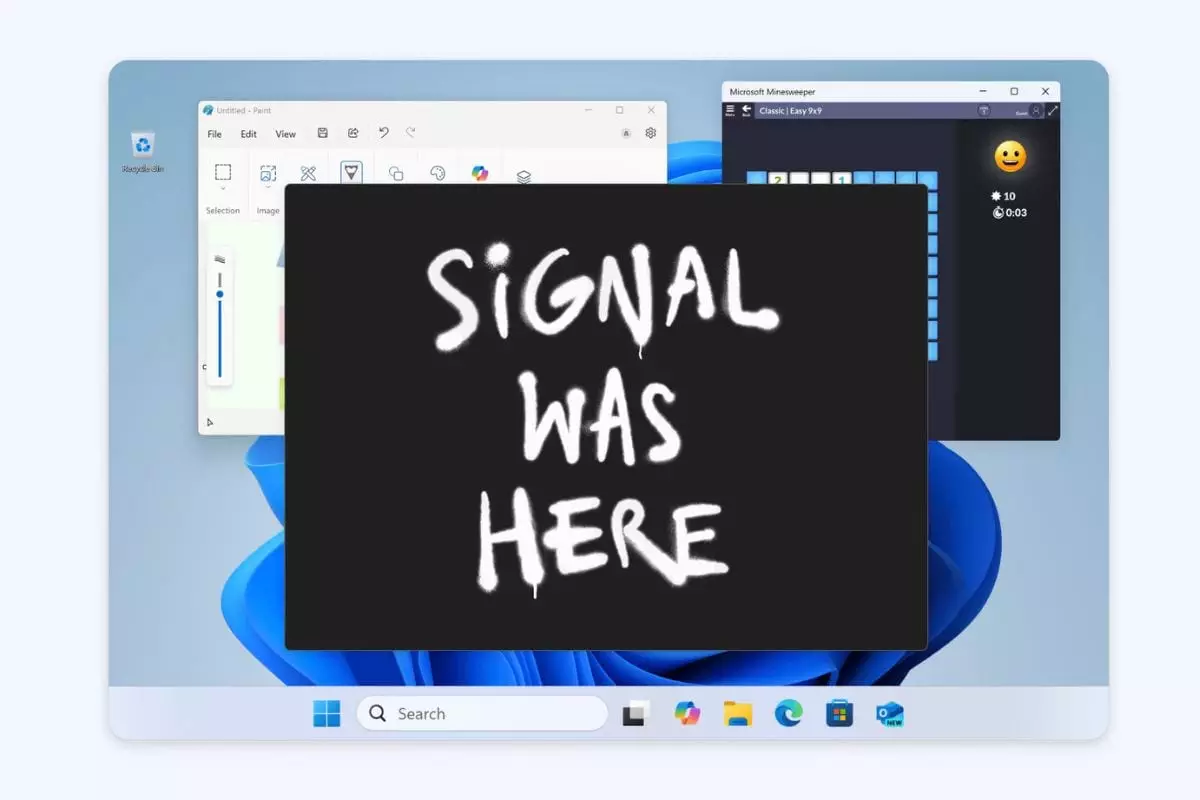

Signal has taken significant steps to shield its users from what could arguably be called surveillance software. The newly introduced Screen Security feature blocks screenshots of the app’s window, keeping sensitive conversations away from prying eyes. The timing is deliberate: Microsoft’s Recall feature, set to roll out to all Copilot+ PCs, is gathering momentum. Recall serves as a digital history tool that tracks users’ activity by continuously capturing screenshots, which raises legitimate concerns about consent and data privacy.

Signal’s reactive measure underscores a critical flaw in the technology ecosystem: features marketed as conveniences often ship without adequate privacy safeguards. While technologies evolve, user protections frequently lag behind, forcing smaller, more privacy-oriented companies like Signal to adapt quickly.

The Battle of Control: Signal vs. Microsoft

Signal’s decision to implement Screen Security illustrates a growing trend of tech companies advocating forcefully for user privacy. It also highlights an alarming pattern in which larger corporations, such as Microsoft, ship features that undermine user consent, even if inadvertently. Although Microsoft says it reworked Recall after the initial backlash, developers still have no option to restrict OS-level AI access to their apps, which remains a glaring omission. Signal’s use of Digital Rights Management (DRM) flags, akin to those used by streaming platforms, is an ingenious workaround for that gap, yet it raises questions about the future of user autonomy in digital spaces.
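
For readers curious what such a flag looks like in practice, here is a minimal sketch, not Signal’s actual code. It assumes an Electron-style desktop app, where `BrowserWindow.setContentProtection(enable)` asks the operating system to exclude a window from capture. The `PrivacySettings` shape, the `screenSecurityEnabled` name, and the `applyScreenSecurity` helper are hypothetical and exist only for illustration.

```typescript
// Structural stand-in for an Electron BrowserWindow. Only the one method
// used here is declared, so the sketch compiles without Electron installed.
interface ProtectableWindow {
  setContentProtection(enable: boolean): void;
}

// Hypothetical settings shape (an assumption, not Signal's real schema).
interface PrivacySettings {
  screenSecurityEnabled: boolean;
}

// Apply the user's choice to the window and report what was applied.
function applyScreenSecurity(
  win: ProtectableWindow,
  settings: PrivacySettings
): boolean {
  const protect = settings.screenSecurityEnabled;
  win.setContentProtection(protect);
  return protect;
}

// Usage with a fake window that records each call it receives.
const recordedCalls: boolean[] = [];
const fakeWindow: ProtectableWindow = {
  setContentProtection: (enable: boolean): void => {
    recordedCalls.push(enable);
  },
};

applyScreenSecurity(fakeWindow, { screenSecurityEnabled: true });  // protection on
applyScreenSecurity(fakeWindow, { screenSecurityEnabled: false }); // user opted out
```

The design point is that the protection is a per-window switch owned by the app, which is why a user-facing toggle can turn it off again when it interferes with other software.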

This clash between corporate ambitions and user rights paints a concerning picture. When a messaging app is compelled to take stringent measures against OS-level features, it reflects a broader dilemma about trust and the ethics of how technology is used. Signal’s position indicates that its guiding philosophy is the inviolable right to privacy, and it urges the industry to reevaluate how new technologies should engage with user data.

The Tension Between Privacy and Accessibility

However, such a security feature is not without drawbacks. Signal acknowledges that this heightened protection could disrupt accessibility tools for users who rely on screen readers and magnifiers. That raises an essential question: can stringent privacy measures be balanced with the need for accessibility? The company’s decision to let users disable the feature shows a commitment to inclusivity, yet it also raises the possibility of misuse through Microsoft’s accessibility software once the privacy restriction is relaxed.

The dilemma presents an ethical paradox. Should the onus of privacy protection rest solely on developers, or should consumers play a more active role in understanding and managing the implications of such features? The warning Signal shows alongside the option to disable Screen Security informs users of the risk, but it also underscores how hard this balance is to strike in a rapidly evolving environment.

The Future of AI and Privacy in Messaging

As AI technologies continue to evolve, the implications for privacy within messaging platforms will remain a significant concern. Signal’s comments reflect a broader frustration from developers about how features like Recall are introduced without adequate consideration for their implications. The expectation that developers should integrate complex privacy measures as a reaction to the oversights of larger tech companies is neither fair nor sustainable.

Signal’s effort to maintain its users’ trust by building protective features is laudable, yet it raises essential questions about the broader industry landscape. The hope is that as AI systems grow more sophisticated, those at the helm of these technologies will take a conscientious approach to user privacy, guided by principles that protect rather than exploit. In a world deeply intertwined with technology, safeguarding individual privacy should be at the forefront of every innovation, rather than treated as an afterthought or a regulatory checkbox.