In a digital age dominated by social media, transparency and user empowerment are essential. Threads, Meta's microblogging platform, has recently introduced a feature called “Account Status.” The tool gives users a clearer view of how their content is handled on the platform, answering a growing demand for clarity in digital spaces. With this move, Threads is not just responding to user frustrations but actively promoting a culture of accountability.

Unveiling the Account Status Feature

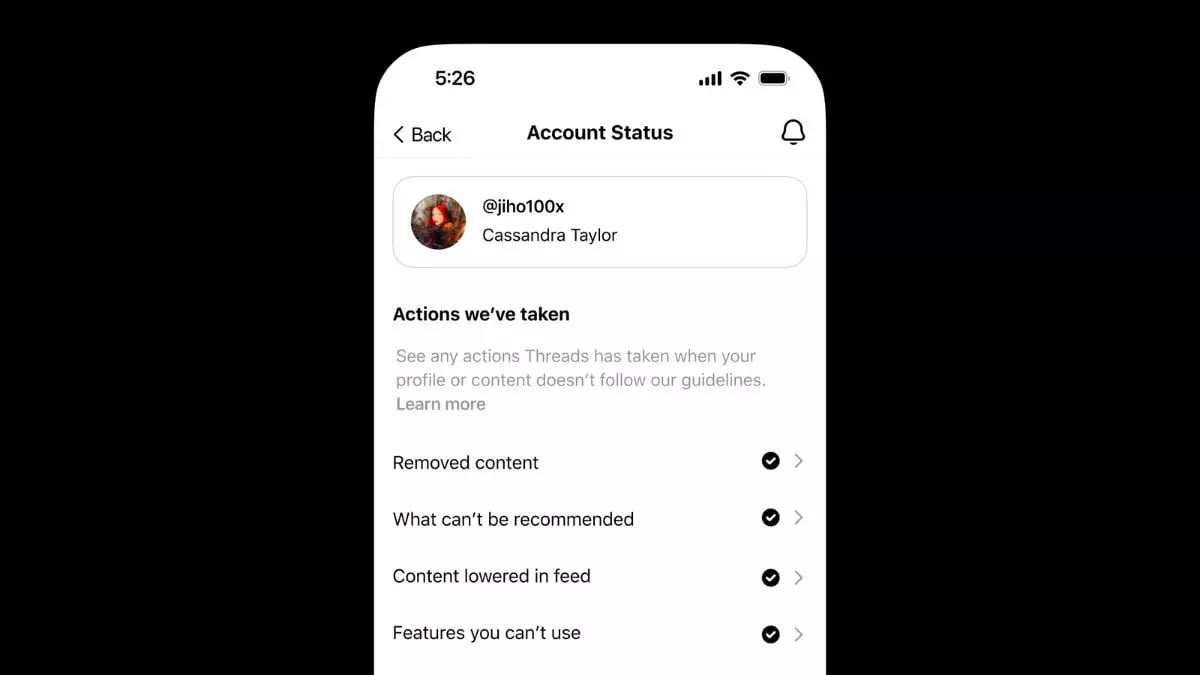

Previously exclusive to Instagram, the Account Status feature is now available on Threads, giving users insight into how their posts are treated. Users can reach it through Settings > Account > Account Status. The feature shows users the actions taken on their content, from removals to demotions, and indicates how well their posts align with community standards.

This proactive transparency helps foster a more informed user base. Knowing when a post has faced moderation may enable users to reconsider their content strategies or understand the nuances of community guidelines better, paving the way for more responsible posting and engagement.

Addressing Content Moderation Concerns

Clarity about when and how content is moderated opens up new avenues for dialogue within the user community. If a user disagrees with a moderation decision, Threads provides a straightforward way to submit a review request. This puts power back into the hands of users and gives them an opportunity to be heard. Threads notifies users once the review is complete, so they are not left in the dark, which fosters a more responsive relationship between the platform and its users.

The implications are significant. Clearer communication can lead to a better understanding of moderation processes, which may reduce user frustration over perceived injustices. In turn, this cultivates a community where users feel respected and valued, which is essential for a healthy online ecosystem.

The Balance Between Freedom and Responsibility

While the Account Status feature is a considerable step forward, it reflects a broader challenge faced by platforms like Threads: striking a balance between fostering free expression and ensuring safety. According to Threads, its community standards apply universally and encompass all content types, including AI-generated material. This vigilant approach underscores the platform’s commitment to authenticity and user safety. However, the inevitable trade-offs between user expression and the mitigation of potential harm remain at the forefront of these discussions.

Moreover, Threads allows for some flexibility in content moderation: posts that violate guidelines may still be permitted if they are deemed to be in the public interest. This nuanced stance highlights the importance of context in content evaluation. However, ambiguity in language can muddy the waters, and users may find some of their expression stifled as these standards evolve.

The Account Status feature certainly advances transparency and user engagement, but the challenge lies in how effectively Threads can maintain the delicate balance between openness and oversight as it continues to grow and evolve.